Nvidia's 'Audio2Face' tech uses AI to generate lip-synced facial animations for audio files

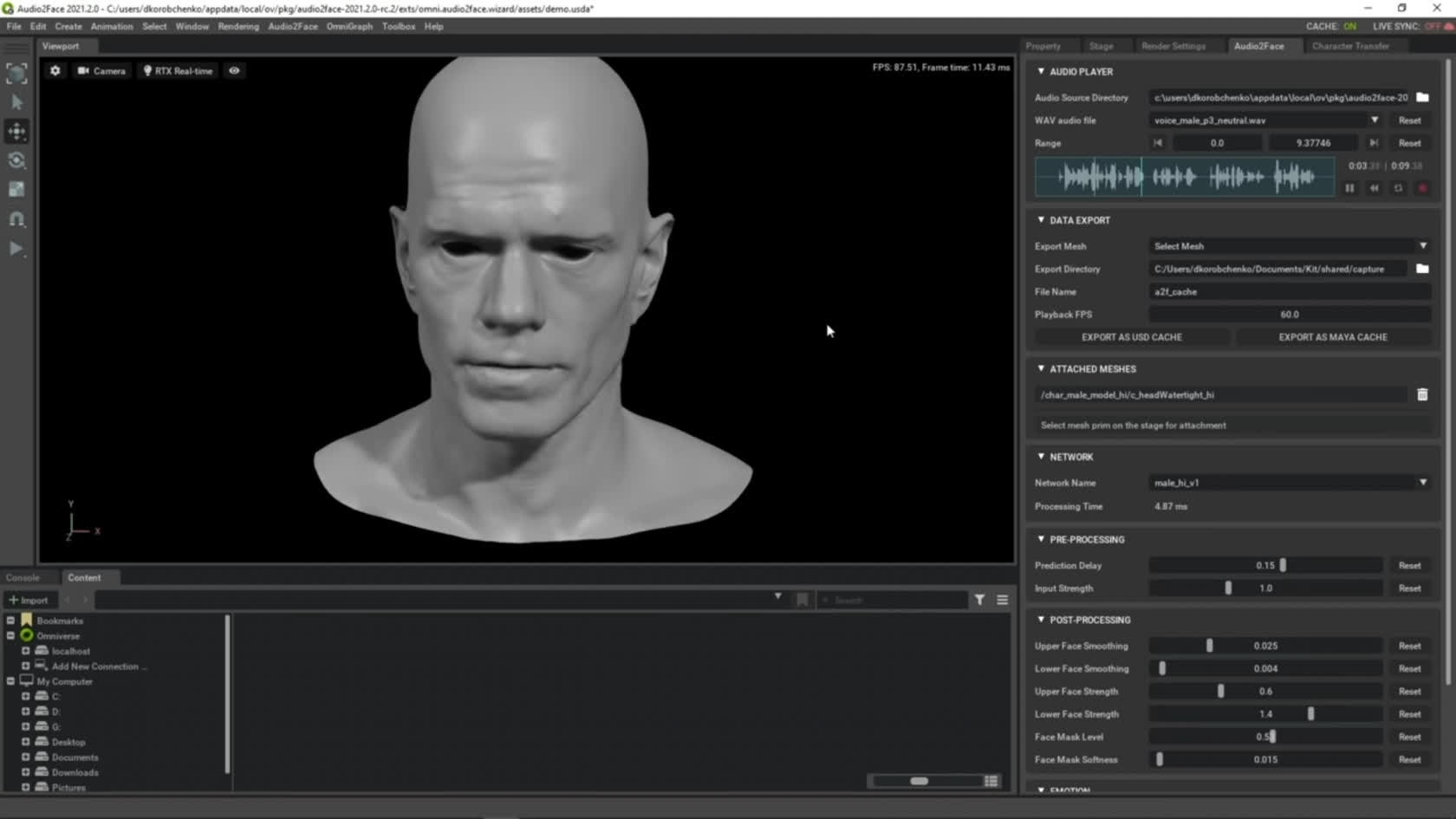

AI innovation: Game development is an incredibly time-consuming and expensive process, with art and animation budgets often eating up a hefty chunk of a team's cash reserves. Believable facial animation, in particular, is critical for cutscene-heavy titles. That's why Nvidia is working on an AI-based tool that can read audio files and create matching facial animations in real time; no mocap is required.

This tech, called "Audio2Face," has been in beta for several months now. It didn't seem to get much attention until recently, despite its potentially revolutionary implications for game developers (or simply animators as a whole).

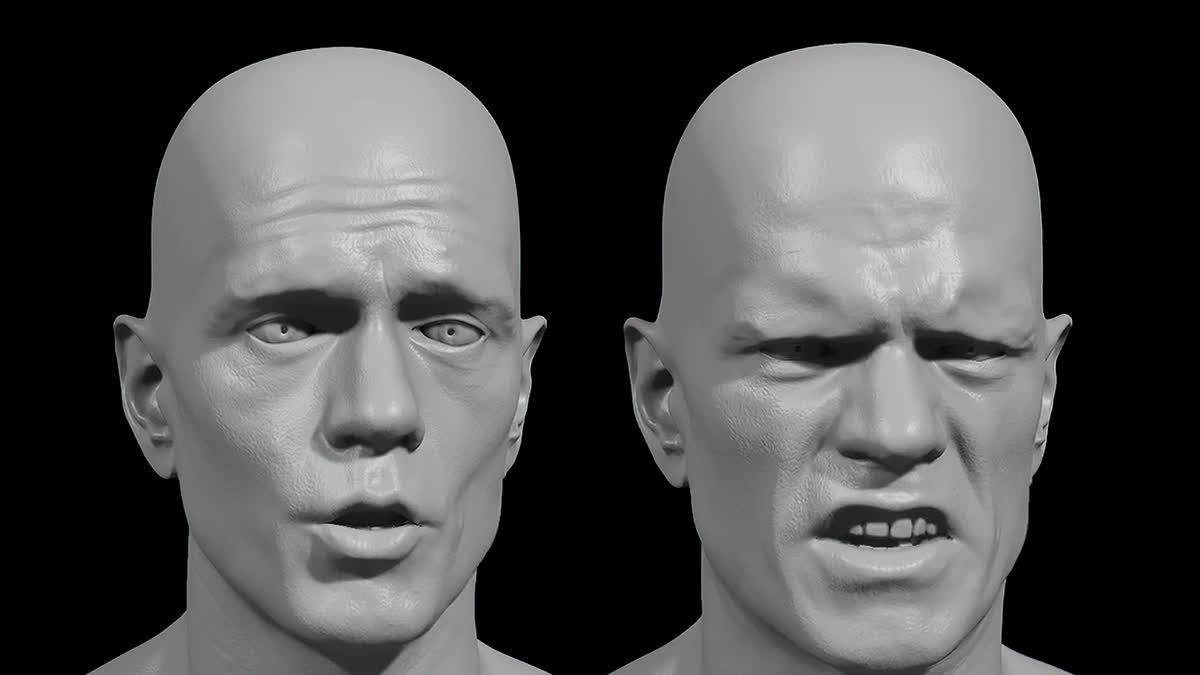

As you'd probably expect from any technology that is both powered by AI and still in beta, Audio2Face isn't perfect. The quality of the source audio will heavily impact the quality of the tech's lip-syncing, and it doesn't seem to do a very good job of capturing facial emotions. No matter what sentence you throw at Audio2Face's default "Digital Mark" character, the eyes, cheeks, ears, and nose all remain fairly static. There is some movement, but it's generally more subdued than the lip animations, which are clearly the main focus.

But maybe that's a good thing. Conveying authentic emotions in the face of a 3D character is what animators train for years to achieve. Depending on how easy this tool is to integrate into a given developer's workflow, it could provide serviceable, perhaps placeholder, lip-sync animations while letting animators focus on other parts of a character's face.

Some of our readers, or simply hardcore fans of Cyberpunk 2077, may recall that CDPR's title used similar technology called "JALI." It used AI to automatically lip-sync dialogue across all of the game's main supported languages (those with both subtitles and voice acting), lifting the burden from the animators themselves.

Audio2Face doesn't have that capability, as far as we can tell, but it still looks useful. If we hear of any instances where a developer has taken advantage of the tech, we'll let you know. If you want to give it a spin yourself, the open beta is available for download, but just know that you'll need an RTX GPU of some kind for it to function properly.

Source: https://www.techspot.com/news/92135-nvidia-audio2face-tech-uses-ai-generate-lip-synced.html

Posted by: morrislible1943.blogspot.com
